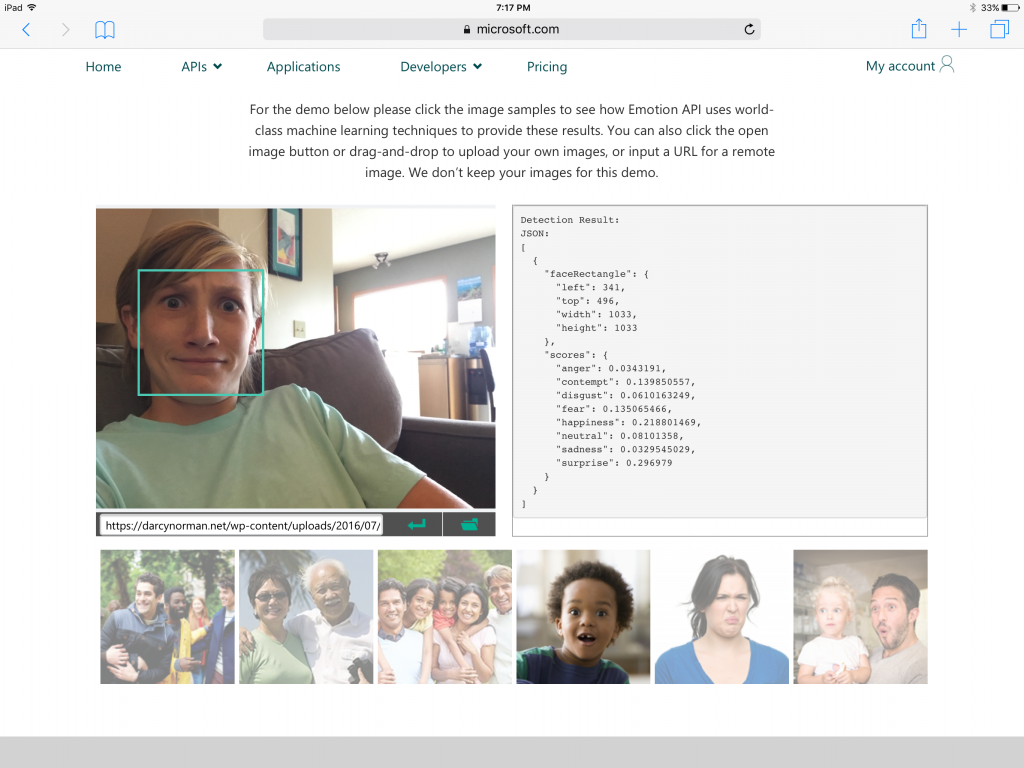

I’ve been thinking a lot about how to design a system for computational ethnography. I think the least intrusive method would be to use machine learning and computer vision to analyze a video stream in real time, using something like the amazing Cognitive APIs coming out of Microsoft and the projects from Microsoft Research.

I’m thinking of building a prototype on a Windows laptop with a webcam, or maybe a Kinect camera.

I’m compiling some links…

My current plan is to build an application that will run on the Taylor Institute’s collaboration carts. It would be installed on the Arrive PC (assuming Windows 8 Embedded can do this on that hardware) and tie into the Logitech webcam on top of each display. That vantage point should capture medium-close views of each collaborative group, and the video stream could be routed through the Microsoft Cognitive APIs to get the raw data needed to generate documentation of a session. It’s just crazy enough to work, and it abstracts enough of the functionality away to make it feasible. Now, to learn how to build that kind of thing…
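A rough sketch of what that pipeline might look like, in Python. It assumes frames arrive as (timestamp, encoded image bytes) pairs from the webcam, and it samples one frame every few seconds (an assumption, to keep API calls and cost manageable) instead of sending every frame. The `analyzer` callable is a hypothetical placeholder for whatever Cognitive Services request ends up being used; it is not a real Microsoft SDK function, and frame capture, authentication and the network call are all left out.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List, Tuple

# A frame is (seconds since session start, encoded image bytes), e.g. a JPEG from the webcam.
Frame = Tuple[float, bytes]
# Hypothetical analyzer: takes one encoded frame, returns labels (emotions, activities, ...).
# In a real build this would wrap a Cognitive Services request; here it is just a callable.
Analyzer = Callable[[bytes], List[str]]


@dataclass
class FrameResult:
    timestamp: float
    labels: List[str]


def sample_frames(frames: Iterable[Frame], interval: float) -> Iterator[Frame]:
    """Yield at most one frame per `interval` seconds to limit the number of API calls."""
    next_time = None
    for ts, image in frames:
        if next_time is None or ts >= next_time:
            yield ts, image
            next_time = ts + interval


def analyze_session(frames: Iterable[Frame], analyzer: Analyzer,
                    interval: float = 5.0) -> List[FrameResult]:
    """Send each sampled frame to the analyzer and keep the timestamped labels."""
    return [FrameResult(ts, analyzer(image)) for ts, image in sample_frames(frames, interval)]


def summarize(results: List[FrameResult]) -> List[str]:
    """Build a simple session log: one mm:ss line per sampled frame, then label totals."""
    lines = []
    for r in results:
        minutes, seconds = divmod(int(r.timestamp), 60)
        lines.append(f"{minutes:02d}:{seconds:02d} {', '.join(r.labels) or '(no labels)'}")
    counts = Counter(label for r in results for label in r.labels)
    lines.append("totals: " + ", ".join(f"{k}={v}" for k, v in counts.most_common()))
    return lines


if __name__ == "__main__":
    # Stand-in analyzer so the sketch runs offline: labels frames by their first byte.
    def fake_analyzer(image: bytes) -> List[str]:
        return ["talking"] if image.startswith(b"T") else ["quiet"]

    frames = [(0.0, b"T1"), (1.0, b"Q"), (5.0, b"Q2"), (7.5, b"T3"), (10.0, b"T4")]
    for line in summarize(analyze_session(frames, fake_analyzer)):
        print(line)
```

With the default 5-second interval, only the frames at 0, 5 and 10 seconds get analyzed, so the demo prints `00:00 talking`, `00:05 quiet`, `00:10 talking` and then `totals: talking=2, quiet=1`. Keeping the analyzer as a plain callable means the real API call could be swapped in later without touching the sampling or the session log.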

Update: Turns out a computer science prof in China has been doing something similar, looking for “boredom”. Interesting, but from the linked articles, it looks like he’s trying to weaponize it for criminology and forensics. Yikes. And “boredom” is only an anti-indicator: it signals the absence of the interesting things, like engagement, excitement, and interaction…