I don’t look at the webserver logs for this webthing in detail, but I’ve been curious about them given the spikes in bot traffic over the last several months. Much of it is the traditional “I’ll just crawl your entire website multiple times per day to feed my search index”, but there’s been a LOT of “I’ll just crawl your entire website multiple times per day to feed my LLM training dataset, and I will ignore rate limiting that you’ve set in robots.txt”.

This second set - the LLM-feeders - are assholes. They use dozens (or more) of separate IP addresses, hammering the webserver multiple times per second to feed their LLM-training appetite. I’ve given up on using robots.txt to coax these assholes to stop being dicks about it, and have started blocking entire ranges of IP addresses.
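For anyone wanting to find candidate ranges to block, here is a minimal sketch of one way to group request IPs into networks and count them. It is not what I actually ran: the `busiest_ranges` helper, the /24 prefix, and the sample addresses (taken from the reserved documentation ranges) are all assumptions for illustration, and it only makes sense for IPv4 as written.

```python
import ipaddress
from collections import Counter


def busiest_ranges(ips, prefix=24, top=3):
    """Group IPv4 addresses into /prefix networks and count hits per network.

    Hypothetical helper: the prefix length and the cutoff are arbitrary choices.
    """
    counts = Counter()
    for ip in ips:
        # strict=False lets us pass a host address and get its enclosing network
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        counts[str(network)] += 1
    return counts.most_common(top)


# Made-up sample addresses from the reserved documentation ranges
sample_ips = ["203.0.113.5", "203.0.113.9", "203.0.113.200", "198.51.100.17"]
ranges = busiest_ranges(sample_ips)
print(ranges)
```

In practice the input would be the client IP column pulled from the access log, and anything blocked this way risks catching legitimate visitors who share a range with a crawler.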

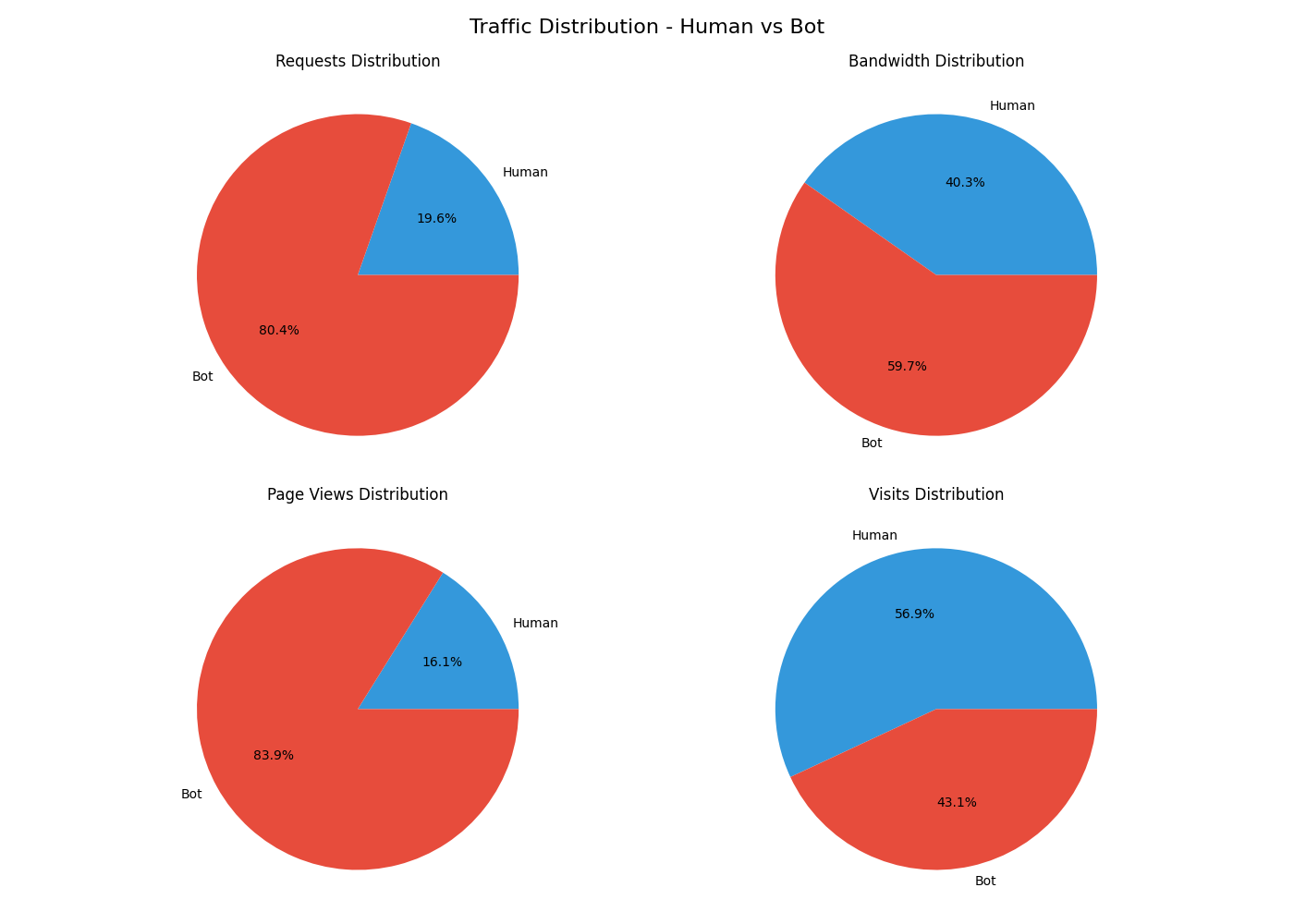

Looking at my webserver logs from April (the month before I started banning them outright), I wanted to see what proportion of website “visitors” were human vs. bots. My web hosting account provides some analytics, but the 3 packages they include don’t seem to agree on what’s going on.

So - I just vibecoded a simple Python script to try categorizing visits logged in the Apache log.
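The script itself isn’t reproduced in this post. As a rough idea of the approach, here is a minimal sketch that assumes the Apache “combined” log format and a hypothetical keyword list (`BOT_HINTS`) for spotting bots by user agent. The real script probably classifies more carefully (it installs the `user-agents` package, for one), so treat the names and the heuristic here as assumptions rather than what it actually does.

```python
import re
from collections import Counter

# Apache "combined" log format (assumed; adjust if your LogFormat differs)
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical substrings that suggest a bot; a crude heuristic, not a complete list
BOT_HINTS = ("bot", "crawler", "spider", "externalagent", "feedparser", "seokicks")


def is_bot(agent):
    """Return True if the user agent contains any of the bot hints."""
    agent = agent.lower()
    return any(hint in agent for hint in BOT_HINTS)


def summarize(lines):
    """Count requests and bytes for bots vs. humans, plus unparseable lines."""
    requests = Counter()
    bytes_sent = Counter()
    invalid = 0
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            invalid += 1
            continue
        kind = "bot" if is_bot(match["agent"]) else "human"
        requests[kind] += 1
        size = match["size"]  # Apache logs "-" when no body was sent
        bytes_sent[kind] += int(size) if size.isdigit() else 0
    return requests, bytes_sent, invalid


# Made-up log lines: documentation-range IPs, user agents borrowed from the output
sample_lines = [
    '203.0.113.7 - - [12/Apr/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
    '198.51.100.4 - - [12/Apr/2025:10:00:01 +0000] "GET /about/ HTTP/1.1" 200 20480 "-" '
    '"Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:72.0) Gecko/20100101 Firefox/72.0"',
    "not a log line",
]
requests, bytes_sent, invalid = summarize(sample_lines)
print(dict(requests), dict(bytes_sent), invalid)
```

A keyword match like this will miss bots that pretend to be ordinary browsers, so any “human” count it produces is an upper bound at best.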

To run the script (I’m on macOS with brew.sh):

    pip3 install pandas matplotlib user-agents tqdm

then

    python3 analyze_apache_logs.py /path/to/your/apache/access_log

It ran for about 10 seconds, and generated:

    Processing darcynorman.net-ssl_log-Apr-2025...
    Analyzing log entries: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████| 421159/421159 [00:09<00:00, 42934.84it/s]

    ===== APACHE LOG ANALYSIS SUMMARY =====
    Log file: darcynorman.net-ssl_log-Apr-2025
    Total valid log entries: 421,146
    Invalid/unparseable entries: 13

    --- HUMAN TRAFFIC ---
    Requests: 82,609 (19.6%)
    Bandwidth: 5934.80 MB
    Page Views: 48,933
    Unique Visits: 22,157
    Unique IPs: 15,207

    --- BOT TRAFFIC ---
    Requests: 338,537 (80.4%)
    Bandwidth: 8805.94 MB
    Page Views: 254,651
    Unique Visits: 16,756
    Unique IPs: 11,485

    --- TOP HUMAN USER AGENTS ---
    [6,698] Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.
    [4,722] Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Sa
    [3,587] Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.3
    [2,522] Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chr
    [2,114] Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:72.0) Gecko/20100101 Firefox/72.0
    [2,083] Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/18.
    [2,030] Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Sa
    [1,670] Mozilla/5.0 (Linux; Android 10; K) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Mobile Sa
    [1,639] Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Sa
    [1,538] Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Ve

    --- TOP BOT USER AGENTS ---
    [50,837] Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)
    [41,170] meta-externalagent/1.1 (+https://developers.facebook.com/docs/sharing/webmasters/crawler)
    [30,413] Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbo
    [18,212] Mozilla/5.0 (compatible; SemrushBot/7~bl; +http://www.semrush.com/bot.html)
    [17,407] Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bin
    [15,087] Mozilla/5.0 (compatible; SEOkicks; +https://www.seokicks.de/robot.html)
    [10,128] UniversalFeedParser/5.2.1 +https://code.google.com/p/feedparser/
    [9,918] Mozilla/5.0 (compatible; AwarioBot/1.0; +https://awario.com/bots.html)
    [7,308] Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chr
    [5,368] Mozilla/5.0 (compatible; Barkrowler/0.9; +https://babbar.tech/crawler)

    Visualizations saved to: /Users/dnorman/Downloads/apache_analysis
    ====================================

The Python script also generated a couple of visualizations - the pie charts were most useful:

So. About 80% of requests come from bots, and they take about 60% of the bandwidth. That makes sense - they don’t (currently) ingest the images, videos, audio, etc. That will change at some point, and they’ll start sucking up even more of the bandwidth.
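As a quick check on those proportions, the arithmetic can be redone from the request and bandwidth figures in the summary. This is just a sketch using the reported totals; the variable names are mine, and the per-request averages assume 1 MB = 1024 KB.

```python
# Figures copied from the script's summary output
human_requests, bot_requests = 82_609, 338_537
human_mb, bot_mb = 5934.80, 8805.94

# Share of requests and bandwidth attributed to bots
bot_request_share = bot_requests / (human_requests + bot_requests)
bot_bandwidth_share = bot_mb / (human_mb + bot_mb)

# Average payload per request, in KB (assuming 1 MB = 1024 KB)
human_kb_per_request = human_mb * 1024 / human_requests
bot_kb_per_request = bot_mb * 1024 / bot_requests

print(f"Bot share of requests:  {bot_request_share:.1%}")
print(f"Bot share of bandwidth: {bot_bandwidth_share:.1%}")
print(f"Average KB per request: human {human_kb_per_request:.1f}, bot {bot_kb_per_request:.1f}")
```

The smaller average response per bot request is consistent with bots mostly fetching pages rather than media.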

(and, yes, I get the irony of vibecoding a script to determine how leechy bots are as they feed data to try to satiate LLM training)
